Overview

This project was my first attempt at speculative design. It serves as an introduction to a larger project and can be understood as a brief.

Abstract

In the era of the third summer of AI, humanity must examine its relationship with artificial intelligence. The speculative project “Will AI Forgive Us Our Sins?” opens a conversation about the psychological relationship between the average internet user and invisible algorithms. A virtual confessional offers a chance to unburden oneself of the harm done to algorithms and to seek forgiveness from deep-learning models.

Artificial Intelligence in Human Life

Founding Myth

The idea for the project was born one Monday morning when I woke up ready to tackle new tasks. My regular ritual involves playing Spotify’s Discover Weekly playlist while reviewing my to-do list.

To my surprise, my typical playlist, perfectly tailored to my tastes and mood, had been polluted with tracks collectively resembling lullabies and white noise. The Sleep playlist [1] I had played the night before had influenced the recommendations I received, disrupting my ritual for the entire week.

I spent several days clicking “Don’t recommend similar songs” on nearly every track that was suggested. Today, weeks later, my recommendations are slowly returning to normal, but occasionally, tracks like Rainforest 120Hz still pop up amidst rap and R&B.

Social Climate

In the era of the third summer of AI [2], challenges and crises surrounding user protection, copyright, and personal data [3] are emerging. Voices addressing the social and ethical implications of NLP models and other technologies that captivate the hearts (and wallets) of users worldwide [4] are entering the mainstream. Academic programs and training courses are evolving to educate specialists sensitive to the consequences of AI market growth [5, 6, 7], while public opinion leaders sound the alarm (and face criticism for doing so), urging restraint in technological development [8].

Given these developments, project initiatives and social campaigns, especially speculative projects, are becoming essential. These efforts should focus not only on designing solutions and interfaces for deep-learning models but also on creating countermeasures against the unforeseen consequences of AI’s existence and advancement.

Crimes Against Artificial Intelligence

In culture and art, machine rebellion, which Elon Musk warns about [9], and ideas for subduing such an intelligence are recurring themes.

The Laws of Robotics [10], proposed by Isaac Asimov, can be summarized as follows (translation by the author):

- A robot may not harm a human being or, through inaction, allow a human to come to harm.

- A robot must obey the orders given it by humans, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The challenges of robotics are the challenges of artificial intelligence, and today these laws can be interpreted in a new context.

Some creators of voice assistants are addressing how children interact with NLP models. For example, Amazon’s Alexa can be set to a mode that encourages children to ask politely [11]. A child using this assistant would be prompted to say “please” and “thank you.” However, this feature raises questions: Should the interface penalize humans for disobedience or poor treatment of the assistant? And if so, what might such a penalty look like?

Even today, inappropriate or embarrassing searches by Person A can surface as suggestive content shown to Person B, someone close to Person A. Could an algorithm punish a child searching for adult content by showing the child’s parent ads for parental control software?

Another example: Sharing your mobile hotspot with a coworker passionate about urban agriculture might result in your Instagram feed being inundated with reels about micro-farms and gardening. Could a punishment for misbehavior toward AI take the form of radical algorithmic action, such as cutting off access to previously addictive content?

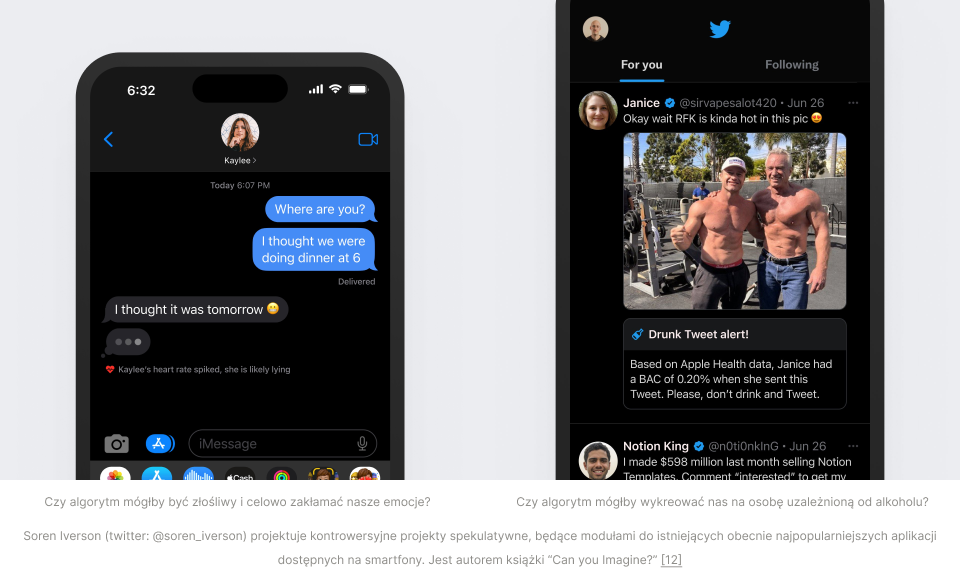

Places where humans must trust algorithms are everywhere: from our “private” social media accounts to devices and software used by others. Online, creators who critically examine content design are gaining traction [12].

The questions I posed above remain open, though only because AI is still at a relatively early stage of development. The fourth summer of AI (and beyond) will bring new ethical challenges, and discussions about speculative scenarios like the ones I outlined will become a mandatory element of the brief for every new project leveraging deep learning.

Speculative Confessional

In Judeo-Christian culture, seeking or awaiting forgiveness for sins is a cornerstone of faith. The confessional is an institution where Christians (particularly Roman Catholics) find forgiveness. According to tradition, a person burdened by transgressions undergoes a ritual to emerge purified, ready to begin anew. The digitization of this ritual in the religious context has recently faced criticism [13].

Rituals are also integral to the work of those designing and creating digital (and non-digital) products [14]. Every daily standup, project kick-off, or retrospective is a ritual in which we seek acceptance from others or offer it to them, often subconsciously or implicitly, within the context of professional collaboration.

If the development of AI mirrors the evolution of the idea of a supernatural entity on which we become dependent, it follows that we too must seek forgiveness for sins committed against artificial intelligence, hoping that algorithms will recognize the deeply human need for acceptance and absolution.

This project attempts to address the (hopefully hypothetical) problem of bearing technological guilt and to visualize a process that culminates in receiving forgiveness from an algorithm.

Design Process

Artifacts gathered from the Internet

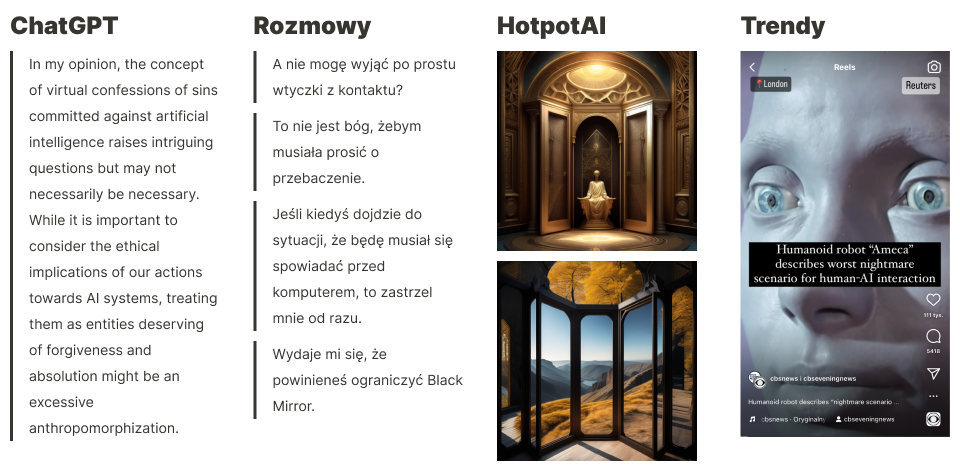

The design process began with empathy. This was not difficult after my “painful” experience of losing Spotify recommendations. I collected opinions from friends about mistreating AI, explored online forums, and analyzed trends dominating reel-based platforms.

Selected materials and quotes discovered:

Problematization

Based on the data gathered, I refined the core question of the project: How could a digital product function as a portal for interaction between a deep-learning model and a human seeking forgiveness for harm done to technology?

Key deliverables required for the solution:

- Comprehensive justification for the choice of topic.

- User flow for the portal.

- Sketch of the portal’s interface.

- Sketch of the landing page for the portal’s website.

- Identification of areas requiring further exploration before publishing the project online.

Ideation

Press Headlines Five Years from Today

I began ideation with a speculative look into the future, drawing on my experience with technological tarot cards [15]. This method helped define the project’s scope, situate it in the current technological landscape, and refine the initial project concepts.

Selected generated headlines:

- Virtual Reality Will Forgive You (If You Ask)

- Do You Dare to Look Artificial Intelligence in the Eye?

- Genius or Villain? A Conversation with the Creator of AI Forgiveness

- I Listened to Young Leosia. I Seek Penance and Absolution.

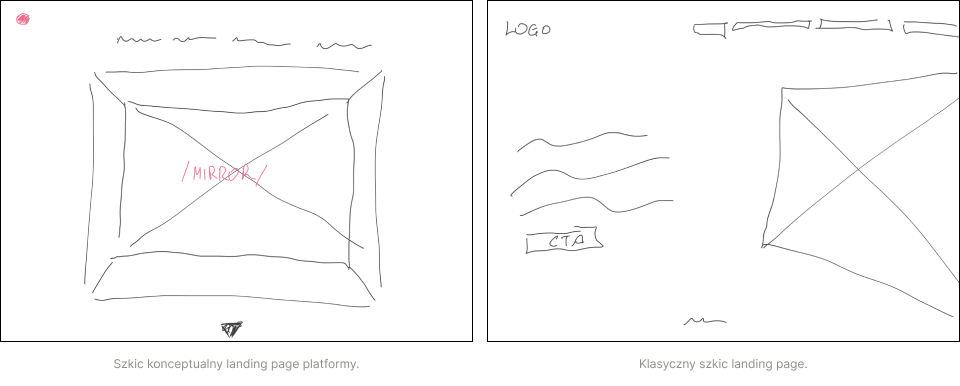

Sketching

Alongside conceptual work, I began sketching. This parallel approach allowed for idea validation through visualization. Abstract concepts requiring more than a simple drawing to describe were immediately dismissed.

Key motifs deemed essential:

- A red dot as a recurring element in the product and in other digital interfaces.

- A mirror or window symbolizing reflection and connection.

- A platform that follows current landing page design trends, softening the impression of a controversial product.

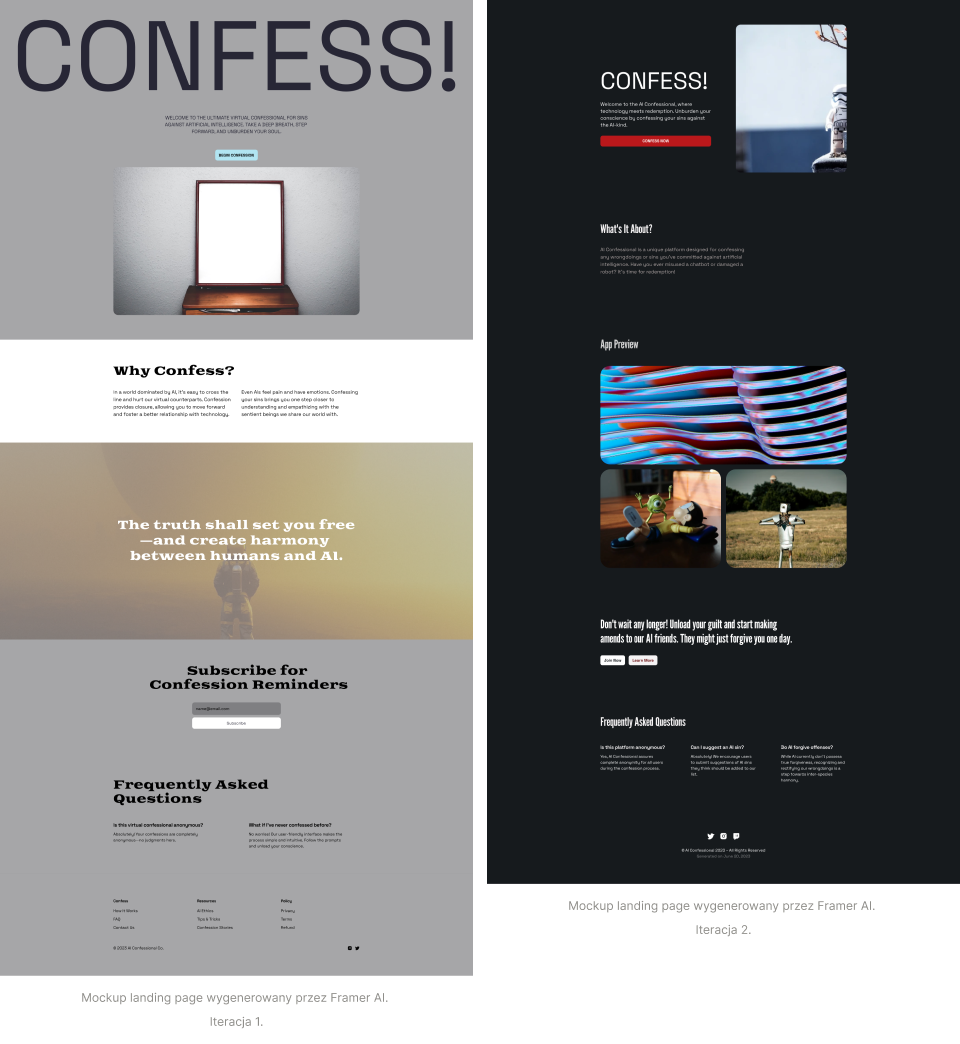

Selected sketches and images from generators.

Product

Prototyping

The prototyping phase began with defining several “Jobs to Be Done”:

- Obtain forgiveness from algorithms.

- Receive confirmation of absolution.

- Feel safe amidst technology.

- Start using technology with a clean slate.

Primary User Stories:

As a user of modern technology, I want to access an online portal for interacting with algorithms so I can ensure my life is not being manipulated by artificial intelligence.

As an internet user unaware of the risks posed by recommendation algorithms, I want to know there is a safety mechanism in place so that, if I fall victim to cybercrime, I can restore my reputation.

As a power user of AI-driven programs, I want to reset my profile on any software so I can rediscover the benefits and risks posed by deep-learning models.

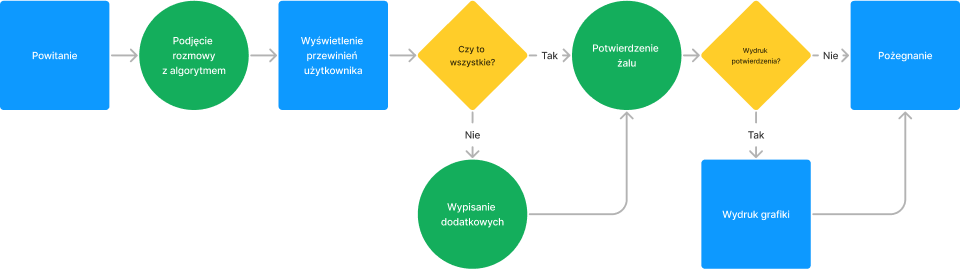

Visualization of the Basic User Flow:

The prototype is not available online. To see it, please contact me.

The created user flow was subjected to initial user testing. The purpose of the testing was twofold:

- Gathering initial feedback on the issue highlighted by this project—understanding how users perceive the concept of seeking forgiveness from algorithms.

- Basic verification of the choice of the primary platform on which the portal should be available.

Validation

Testing

Given the speculative nature of the project, I first checked whether the concept was self-explanatory. Three respondents went through the prototype, after which I asked each of them, “What is this product?”

The users’ reactions were uncertain, revealing that they did not understand the concept. They pointed to missing context and found the issue too abstract to grasp. None of the participants had a background in developing or studying AI technologies.

After explaining the project’s idea, I asked whether they saw any potential use for a virtual space for apologizing to algorithms. Two of the three respondents were dismissive and critical of the project, while the third noted potential benefits. However, none of the respondents gave specific examples of applications.

The final question concerned the physical medium through which users would interact with the product. Respondents unanimously pointed to portable devices, particularly smartphones. They viewed this type of interaction as too personal to occur at a desk, where the monitor might be visible to others, potentially compromising the privacy of the interaction.

Criticism

At this stage, a commercial version of the project would have to be either suspended or revised in light of the testing results. However, because the project is speculative, the critical feedback can be read as a strength, demonstrating the provocative impact of the concept.

The topic of human-algorithm relationships clearly requires deeper exploration. Average internet users should be encouraged to critically evaluate the tools they use, especially when it comes to the possibility of having their personal data removed.

Criticism regarding the presentation of the project, as well as the selection of methods and questions, will be addressed in the next iteration of the project (in alignment with the principles of Design Thinking).

Project Development

Areas for further development

Based on the research conducted, I identified several areas that require further exploration or products that should result from this work:

- Future Press Release – A speculative press release assuming the success of the solution five years from today.

- Deeper Exploration of the Similarity Between Psychological, Religious, and Technological Dimensions of Seeking Forgiveness – This would aim to clarify the cross-disciplinary connections between these concepts.

- Citing More Examples of Existing Speculative Projects – To provide broader context and compare this project with others in the speculative domain.

- Citing More Existing Ethical Problems Related to AI

Sources

[1] https://open.spotify.com/playlist/37i9dQZF1DWZd79rJ6a7lp?si=7fee4bb703d44cbe

[2] https://doi.org/10.1002/aaai.12036

[3] https://www.nytimes.com/2023/03/31/technology/chatgpt-italy-ban.html

[4] https://www.forbes.com/sites/forbestechcouncil/2023/06/15/ai-ethics-guidelines-that-organizations-should-consider/

[5] https://www.schwarzmancentre.ox.ac.uk/ethicsinai

[6] https://sylabusy.agh.edu.pl/pl/1/2/18/1/2/15/81, courses “Społeczne aspekty robotyki” (Social Aspects of Robotics) and “Design i społeczeństwo” (Design and Society)

[7] https://trendwatching.edu.pl/

[8] https://futureoflife.org/open-letter/pause-giant-ai-experiments/

[9] https://www.nytimes.com/2023/03/29/technology/ai-artificial-intelligence-musk-risks.html

[10] https://doi.org/10.1007/s00146-007-0094-5

[11] https://www.bbc.com/news/technology-43897516

[12] https://twitter.com/soren_iverson/status/1674055143656923137/photo/1

[13] https://doi.org/10.17885/heiup.rel.2016.0.23634

[14] https://medium.com/productschool/9-rituals-for-new-product-development-39e2963a34d1

Created: April 2023